From Zero to Production in less than a day

At Media Distillery we make ample use of the microservices paradigm. Each of our products is powered by a multitude of microservices that provide functionality such as (semantic) search, data storage, dashboards, EPG correction, face recognition, face clustering, text recognition, thumbnail selection, topic detection, topic segmentation, and more.

The microservices in our platform can be divided into two groups: Java services and Python services. The latter take care of the majority of our ML needs and are the focus of this article. We’ll go over how we make a new Python service, what such a service looks like, and which tools and technologies we use to create and deploy it.

An average Python service

In the simplest case, the pipeline behind a product is split into two services: a Java service and a Python service. The former takes care of things such as clustering, load balancing, and data storage. The latter hosts the relevant machine learning model(s): it passes the input, such as an image or a piece of text, through the model and handles the pre- and post-processing of the input and output. The Java and Python services communicate through a simple HTTP REST API.
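
To make the division of work on the Python side concrete, here is a minimal sketch of the pre-process, model, post-process flow described above. It uses only the standard library; the class name, the toy keyword "model" and the threshold are hypothetical stand-ins chosen for illustration, not code from an actual service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TextClassifierPipeline:
    """Hypothetical pipeline: pre-process -> model -> post-process."""

    model: Callable[[List[str]], Dict[str, float]]  # stand-in for a real ML model
    threshold: float = 0.5

    def preprocess(self, text: str) -> List[str]:
        # Normalise the raw input into the token list the model expects.
        return text.lower().split()

    def postprocess(self, scores: Dict[str, float]) -> List[str]:
        # Keep only labels whose score reaches the threshold, best first.
        kept = [label for label, score in scores.items() if score >= self.threshold]
        return sorted(kept, key=lambda label: scores[label], reverse=True)

    def __call__(self, text: str) -> List[str]:
        return self.postprocess(self.model(self.preprocess(text)))


def keyword_model(tokens: List[str]) -> Dict[str, float]:
    # Toy "model": scores a label by the fraction of tokens matching its keyword.
    keywords = {"sports": "football", "news": "election"}
    total = max(len(tokens), 1)
    return {label: tokens.count(word) / total for label, word in keywords.items()}


pipeline = TextClassifierPipeline(model=keyword_model, threshold=0.2)
labels = pipeline("Football fans discuss football")
```

In a real service the `model` callable would wrap the actual ML model, and the HTTP layer would only need to call `pipeline(...)` on the request payload.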

Thanks to this setup we can take advantage of the maturity and stability of the Java landscape while giving the ML side the flexibility and freedom to keep up with developments in the quickly changing, and slowly maturing, Python environment.

Tools, Tools, Tools! (and tech)

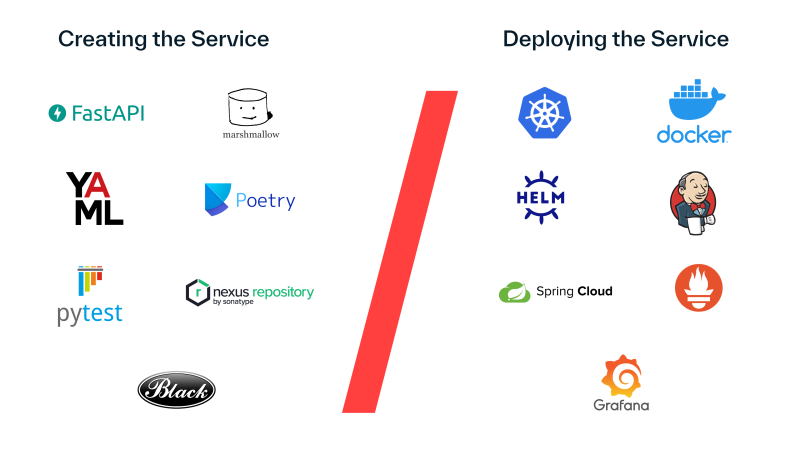

To create and maintain a Python service we make use of a host of open-source tools. For simplicity, we’ve divided the tools that we use into two groups: on one hand, we have the ones used for creating the service and, on the other hand, those used for getting that service onto our platform.

Creating the service

FastAPI

For our HTTP REST framework, we use FastAPI. This framework has been gaining a lot of popularity in recent years and, as we were on the lookout for an alternative, FastAPI seemed like an excellent replacement. What caught our attention was primarily the high performance of the framework, but the overall feature set and design philosophy also ranked high on our list.

FastAPI has turned out to be an excellent fit, although we’ve yet to take full advantage of the asynchronous side of the framework.

Marshmallow / Pydantic

For data serialisation and validation we’ve mainly been using Marshmallow over the years, without any complaints. It has improved not only the overall quality of our services, but also the quality of our prototyping, evaluations and other investigations. Even though it has been a great library, we’ve recently been looking at switching over to Pydantic, simply because FastAPI supports Pydantic models out of the box (and not Marshmallow).
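
For readers unfamiliar with Pydantic, the following sketch shows the kind of validation it provides. The `Detection` and `DetectionResult` models and their fields are hypothetical and only meant to illustrate nested models, type coercion and constraint checking.

```python
from typing import List

from pydantic import BaseModel, Field, ValidationError


class Detection(BaseModel):
    label: str
    confidence: float = Field(ge=0.0, le=1.0)  # must lie in [0, 1]


class DetectionResult(BaseModel):
    frame_id: int
    detections: List[Detection]


def parse_result(payload: dict) -> DetectionResult:
    # Raises pydantic.ValidationError when the payload does not match the schema.
    return DetectionResult(**payload)


# The numeric string "7" is coerced to the integer 7 by default.
valid = parse_result(
    {"frame_id": "7", "detections": [{"label": "face", "confidence": 0.93}]}
)

try:
    # A confidence of 1.7 violates the le=1.0 constraint.
    parse_result({"frame_id": 8, "detections": [{"label": "face", "confidence": 1.7}]})
    rejected = False
except ValidationError:
    rejected = True
```

Because FastAPI accepts such models directly as request and response types, the same class can validate incoming data and document the API.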

YAML

For configuration purposes, we make use of YAML files. Not too exciting, but it ties into another configuration tool that comes into play in the deployment phase.
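
A minimal sketch of loading such a configuration in Python, assuming the PyYAML package; the keys and values in the example document are invented for illustration.

```python
import yaml  # provided by the PyYAML package

# Hypothetical configuration; a real service would read this from a file or URL.
EXAMPLE_CONFIG = """
service:
  name: example-ml-service
  port: 8080
model:
  path: /models/example.onnx
  batch_size: 16
"""


def load_config(text: str) -> dict:
    # safe_load restricts parsing to standard YAML tags and yields plain Python types.
    config = yaml.safe_load(text)
    if not isinstance(config, dict):
        raise ValueError("top-level YAML node must be a mapping")
    return config


config = load_config(EXAMPLE_CONFIG)
batch_size = config["model"]["batch_size"]
```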

Poetry

Similar to FastAPI, this setup.py replacement has been gaining a lot of popularity in recent years. Although setup.py and setuptools were mostly working fine for us, they were slowly becoming outdated and it was time to start looking for a new dependency and package management tool. In the end, we settled on Poetry, which we found very easy to work with and which keeps improving thanks to an active community.

Pytest

Naturally, we also need unit tests for our code. For this, we make use of Pytest, which appears to be the de facto standard when it comes to unit testing in Python.
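
As a small example of the Pytest style, the sketch below tests a hypothetical helper function. Pytest discovers functions whose names start with `test_` and reports plain `assert` failures with detailed output; `pytest.raises` checks that an exception is raised.

```python
from typing import List

import pytest


def normalise_scores(scores: List[float]) -> List[float]:
    """Scale non-negative scores so that they sum to 1 (hypothetical helper)."""
    if any(score < 0 for score in scores):
        raise ValueError("scores must be non-negative")
    total = sum(scores)
    if total == 0:
        raise ValueError("at least one score must be positive")
    return [score / total for score in scores]


def test_normalise_scores_scales_to_unit_sum():
    assert normalise_scores([1.0, 3.0]) == [0.25, 0.75]


def test_normalise_scores_rejects_negative_values():
    with pytest.raises(ValueError):
        normalise_scores([1.0, -1.0])
```

Saved as, say, `test_scores.py`, the tests run with the `pytest` command from the project root.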

Nexus

As we build a lot of internal Python libraries, it makes sense to store all of them in a shared location. For this purpose, we use Nexus, which provides PyPI repositories out of the box. We also use it for Maven repositories, which fits nicely with our mix of Java and Python services.

Black

Black is a very recent entry to the list of our tools. In the past we haven’t made use of code formatters, but the need for them has been growing. Black, which recently released its first stable version, is slowly becoming the standard for Python code formatting. Our first impressions so far have been good and we’ll likely be using it more often in the future.

Deploying the service

Kubernetes

All our services are deployed as containers to an on-premises installation of Kubernetes, which is mainly managed by our Backend department.

Docker

For creating the deployable containers we use Docker. For each new version of an ML service, we create a new Docker image that exposes the API.

Helm

Helm is essentially a layer on top of Kubernetes to ease the deployment and management of services. For each service, in addition to a Docker image to encapsulate the service, a new Helm “chart” needs to be created, which defines how the Docker image should be deployed on Kubernetes.

Jenkins

Jenkins provides a way to automate arbitrary development tasks. For the ML services we have defined various automation pipelines that, for instance, build service snapshots and make new releases. Those pipelines have not only sped up our development but also eased collaboration between different teams and products.

Spring Cloud Config

As previously mentioned, we use YAML files for configuration. In order to make services easily configurable, it is best to not package their configuration files as part of the Docker image but instead have them hosted at a separate location. To achieve this, we use Spring Cloud Config, a service that connects to a Git repository hosting all our configuration files, making them easily accessible through an HTTP REST API.
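
To illustrate how a Python service might read its configuration from a Spring Cloud Config server, here is a standard-library sketch. It relies on the server's `/{application}/{profile}[/{label}]` endpoint, which returns a JSON document with a `propertySources` list. The host name, application name and property keys are made up, and the merge order assumes that sources are listed with the highest precedence first.

```python
import json
import urllib.request
from typing import Any, Dict
from urllib.parse import quote


def config_url(base_url: str, application: str, profile: str, label: str = "") -> str:
    # Config Server exposes environments at /{application}/{profile}[/{label}].
    parts = [application, profile] + ([label] if label else [])
    return base_url.rstrip("/") + "/" + "/".join(quote(p, safe="") for p in parts)


def merge_property_sources(environment: Dict[str, Any]) -> Dict[str, Any]:
    # Assumption: sources are listed highest-precedence first, so apply them
    # in reverse order and let earlier sources overwrite later ones.
    merged: Dict[str, Any] = {}
    for source in reversed(environment.get("propertySources", [])):
        merged.update(source.get("source", {}))
    return merged


def fetch_config(
    base_url: str, application: str, profile: str, label: str = "", timeout: float = 5.0
) -> Dict[str, Any]:
    # Performs the HTTP request; needs a reachable config server.
    url = config_url(base_url, application, profile, label)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return merge_property_sources(json.load(response))


# Offline demonstration with a hypothetical server response.
example_url = config_url(
    "http://config.example.internal:8888/", "face-recognition", "production"
)
example_env = {
    "name": "face-recognition",
    "profiles": ["production"],
    "propertySources": [
        {"name": "face-recognition-production.yml", "source": {"model.batch_size": 32}},
        {
            "name": "face-recognition.yml",
            "source": {"model.batch_size": 8, "server.port": 8080},
        },
    ],
}
settings = merge_property_sources(example_env)
```

Keeping the URL construction and the merging separate from the network call makes both easy to unit test without a running config server.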

Prometheus

To get visibility into the state of our running services, we need to expose metrics from each service and monitor them. For this we use Prometheus. Each service creates and exposes its metrics using a Prometheus client, and a separate Prometheus server pulls these metrics from all the services. Alerts based on those metrics are also configured in the Prometheus server.
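
The client side of this setup could look roughly like the sketch below, which uses the `prometheus_client` package. The metric names, the `model` label and the placeholder inference function are assumptions made for the example; a dedicated registry is used only to keep the sketch self-contained.

```python
from prometheus_client import CollectorRegistry, Counter, Histogram, generate_latest

registry = CollectorRegistry()

# Exposed as "inference_requests_total"; the client appends the _total suffix.
REQUESTS = Counter(
    "inference_requests",
    "Number of inference requests handled",
    ["model"],
    registry=registry,
)
LATENCY = Histogram(
    "inference_latency_seconds",
    "Time spent running inference",
    ["model"],
    registry=registry,
)


def run_inference(model_name: str, text: str) -> int:
    REQUESTS.labels(model=model_name).inc()
    # The context manager records the elapsed time in the histogram.
    with LATENCY.labels(model=model_name).time():
        return len(text.split())  # placeholder for a real model call


token_count = run_inference("demo", "hello prometheus world")

# Text exposition format, i.e. what a /metrics endpoint would return.
exposition = generate_latest(registry).decode("utf-8")
```

The Prometheus server then scrapes this text output over HTTP, for example from a `/metrics` route or from the small server started by `prometheus_client.start_http_server`.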

Grafana

Grafana is a layer on top of Prometheus, allowing us to create much nicer visualisation dashboards of Prometheus metrics.

Tying it all together

All these tools are great and all, but in the end, what matters most is how they all form a fully-fledged service. To help with this goal, we created a service template.

Our approach

For quite a while, whenever we needed to build a new service, we either started from scratch or copied an existing one. Although copying can save time, the copied service often lags behind current standards; those shortcomings then carry over to the new service and create more technical debt. Furthermore, as we work in multiple teams, each team is responsible for different services and adopts different standards. This eventually causes more drift between the teams and reduces the maintainability of our services as a whole.

To combat these issues we created a service template, adapted from an existing FastAPI template. It is essentially nothing more than a folder containing the file structure of a proper service, set up with the correct tooling. If we want to introduce new tools, we add them to the service template, so that all new services start out up to date with the current standards.

Giving back

The service template has helped us a lot, enabling faster development and improving the overall quality and consistency of our codebase.

Since we make use of a lot of open-source tools, we want to give something back to the community by open-sourcing our service template. Whether you’re interested in using the template directly or simply want to learn more about what a production-quality Python service might look like, we hope this template will be as useful to you as it keeps being for us.

The service template is available on GitHub. You can simply copy the template, adjust the naming and implement the new logic.

Closing thoughts

In this article, we went over the tooling we use for Python services and the service template that ties them together. Although it’s been working very well for us, we’re always looking at how we can improve further. For instance, the service template doesn’t work retroactively, meaning that applying new standards to existing services still needs to be done manually.

We’ve also recently had our eye on improving our testing standards, such as the inclusion of test automation tools like Tox or Nox. On the ML side, we’ve recently benefited greatly from the use of inference servers like NVIDIA Triton, which may end up significantly impacting what our service template looks like.

All in all, there are several aspects to further investigate and improve, so keep an eye on our publications for refinements and additions to our tech stack.

June 23, 2022