The Winning Formula

How to find the most appealing and attractive images to engage, excite, and keep your viewers

Whenever you browse through a catalogue of a video service, you find yourself in front of a wide variety of images, all asking for your attention. But if beauty really is in the eye of the beholder, why do we all tend to be drawn to (and like) similar, visually aesthetic images, while ignoring others?

Large production houses invest time creating aesthetically pleasing images to promote their shows and movies to viewers. However, for a large chunk of content that TV operators provide, these images are still missing, especially for live programming.

Instead, their catalogues contain repetitive stock photos that give their platform a dated feel and make it impossible to find the right content quickly. In this era of unlimited content, decreasing attention spans, and a plethora of content-hosting platforms, this means viewership is harder to retain and easier to lose.

This is precisely where Image Distillery™ comes into play: a fully automated service that delivers high-quality and attractive images directly from the broadcast feed or video asset. Let’s explore how we do that.

Figure 1. The following image illustrates the UI before and after the implementation of Image Distillery™.

Testing your way to the perfect thumbnail with data and the naked eye

If you want to pick appealing images from a video, you have some diverse options.

For example …

Having an editorial team that is manually selecting images is a possibility, but it usually ends up being a slow and expensive process, and we all know that time is precious.

Leveraging the power of user data is another alternative, by letting the audience decide which images are resonating most with their preferences. With a user-based approach, you don’t need to define beforehand what makes an image look pretty; you can just verify which thumbnails work well with your user base. For instance, through A/B testing you can select what works well for each user. This method has its advantages, as there is no need to define any metrics regarding what is pretty and you can evaluate what resonates well with large, diverse audiences. Additionally, your continuous experimentation allows for a product that improves its quality over time.

However, this approach has some serious pitfalls. Since the introduction of GDPR, companies require the user’s consent to track them. Most people are not willing to give permission, especially if it is just for better thumbnails. Secondly, this problem suffers from a cold start: what kind of images will you send to your first users? Are they going to be random frames from your video content, or will there be screening first?

Ultimately, this approach can create serious feedback loops with very adverse outcomes. For example, in October 2018, news articles popped up about Netflix supposedly showing thumbnails with actors of the same race as the viewers1. Netflix claimed they did not store this information but that the algorithm inferred this itself.

Lastly, you have the option to train an algorithm to select stills based on content characteristics. With the content-based approach, there is no need to deal with the issues associated with the user-based methodology. Here you only need to develop specific ways to quantify which images work well as thumbnails. To succeed in doing this, you can get help from two areas: our biological wiring, and photography.

The inner highlighter at work

What is pretty might be subjective, but what draws our initial attention is far from random. In general, we follow a precise order when looking at images, and it goes like this:

Human faces > Human bodies > Animal faces > Text > Bright Colors > Everything else

This initial involuntary act is called bottom-up attention. Its counterpart is top-down attention: a slower process and cognitive action that involves an effort, an act of looking for a specific element. (Examples of this are searching for a movie with one of your favourite actors or scrolling around to find F1 cars from the previous day’s race.)

The beauty of (non) randomness

Aside from what catches our attention, what people consider pretty is not entirely random, either. Otherwise, photographers and designers would not have succeeded in their professions. Let’s look at some fairly straightforward rules in photography that make an image stand out.

In general, less is more. You don’t want a picture with many moving parts, but you do want to have a focal point that grabs the attention. One of the most well-known rules in photography is the rule of thirds, which prescribes focusing your lens at ⅓ or ⅔ of the image to capture a well-framed, visually pleasing photo (and it holds for both the horizontal and vertical parts of the picture).

The right usage of colours will draw the viewer’s gaze to the right areas, without being blinded by a full colour palette. Manipulating the color contrast between bright and dark areas is a useful eye-catcher technique, while the correct use of motion blur plays an important role in highlighting a person or object in a frame.

These are just a selection of tips that help you compose shots that are more visually appealing while retaining the attention of the spectator, but there is another element of photography that is more unique to human behavior than it is rooted in technical aptitude, and that is breaking the rules.

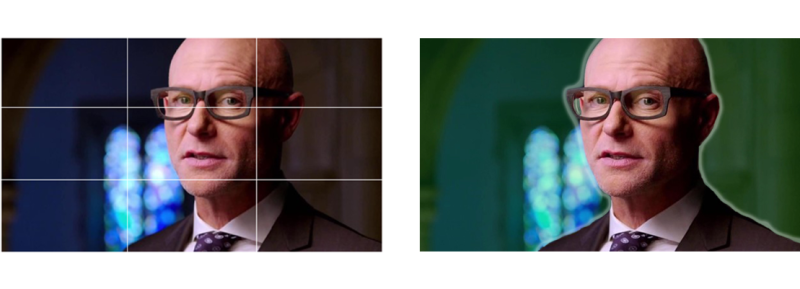

Figure 2. The following visuals illustrate how photography rules can help in the creation of pleasing images. The selected image respects the rule of thirds (as shown on the left), and the focus on the subject is emphasized via a blurry and dark background in contrast with the bright subject’s face (as shown on the right).

And the winner is …

The freedom of the content-based approach, combined with the absence of user data and its related issues, makes it a true winner in the hunt for appealing images. Combining our knowledge of human nature and behavior with the lessons learned from photography lays the groundwork for identifying which images will attract viewers’ attention.

Now that we know how to define the elements that make for a good thumbnail, we can try to encapsulate these into features that a program can understand as well. However, traditional computer vision techniques are not useful for these, since the amount of features you will have to write are simply too numerous.

Instead, we have a convolutional neural network to rate images on a scale from 1 to 10. Training a neural network from scratch would require too much data, so we use a MobileNet architecture and apply transfer learning to improve the quality of the model. In transfer learning, you apply a model from a certain domain and use that knowledge to learn a new domain. The analogy is very similar to how humans learn a language: a Spaniard does not have to learn Italian from scratch, as there is a lot of overlap between the two languages. For a native Japanese speaker, it would be much more difficult to do, as the languages are almost completely different. By using a neural network trained on classifying images, we only need a few thousand examples of images with a rating to get the job done.

More than just “pretty”

The process and journey of searching for and finding appealing and attractive images are highly satisfying, opening the door to user experiences that no longer offer anonymous and repetitive thumbnails. But is beauty on its own, enough?

In this blog, we have demonstrated how video services can create good-looking thumbnails for a better user experience. We showed that there are several ways to do it, and demonstrated how we can build a fully-automated solution that doesn’t require tracking your users. But the perfect thumbnail is more than just pretty; it has to help the user find the content that they are looking for, and give them just that.

¹The Guardian, “Film fans see red over Netflix ‘targeted’ posters for black viewers

July 22, 2021